NAS_Survey_Notes_Ⅰ

Elsken, T., Metzen, J. H., & Hutter, F. (2019). Neural Architecture Search. 20, 63–77. https://doi.org/10.1007/978-3-030-05318-5_3

First Section: Introduction

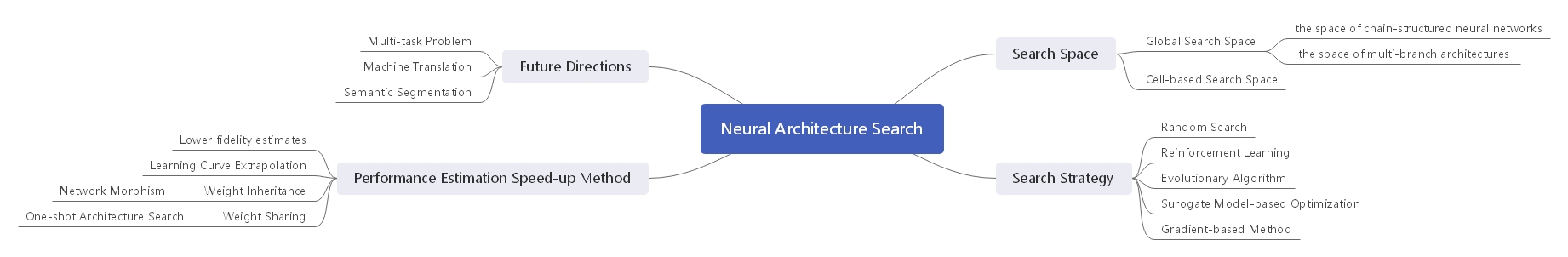

NAS (Neural Architecture Search) methods can be categorized along three dimensions: search space, search strategy, and performance estimation strategy.

A search strategy selects an architecture A from a predefined search space 𝒜. The architecture is passed to a performance estimation strategy, which returns the estimated performance of A to the search strategy.

- Search Space: The search space defines which architectures can be represented in principle.

- Search Strategy: The search strategy details how to explore the search space (which is often exponentially large or even unbounded).

- Performance Estimation Strategy: Performance estimation refers to the process of estimating an architecture's predictive performance. The simplest option is to perform a standard training and validation of the architecture on data, but this is computationally expensive and limits the number of architectures that can be explored. Much recent research therefore focuses on developing methods that reduce the cost of these performance estimations.

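In short, NAS (Neural Architecture Search) methods fall along three dimensions: search space, search strategy, and performance estimation strategy.

The search strategy picks a candidate architecture from the predefined search space. The performance estimation strategy then evaluates the candidate and returns its estimated performance. This process is repeated until an architecture that meets the performance requirement is found.

The search space defines which network architectures can be represented in principle.

The search strategy describes how the search space is explored (the space is large and may even be unbounded).

The performance estimation strategy evaluates the candidate architectures. The simplest approach is to train and validate each candidate, but that is costly in both computation and time, so new methods are needed to reduce the time and compute spent on performance estimation.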
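
The interaction between the three components described above can be sketched as a small loop. This is only an illustration under stated assumptions: the toy search space of (depth, width) pairs, the made-up scoring function, and the names `SEARCH_SPACE`, `estimate_performance`, `random_search_strategy`, and `run_nas` are all hypothetical and do not come from the survey.

```python
import random

# Hypothetical toy search space: each architecture is a (depth, width) pair.
SEARCH_SPACE = [(depth, width) for depth in range(1, 6) for width in (16, 32, 64)]


def estimate_performance(arch):
    """Stand-in for the performance estimation strategy.

    A real implementation would train and validate the architecture.
    This made-up score simply peaks at depth 3 and width 64.
    """
    depth, width = arch
    return 1.0 - 0.1 * abs(depth - 3) - 0.2 * (64 - width) / 64


def random_search_strategy(history, rng):
    """Stand-in search strategy: ignores the feedback and samples uniformly."""
    return rng.choice(SEARCH_SPACE)


def run_nas(num_iterations, target, seed=0):
    """Iterate select -> estimate -> feed back until the target is met."""
    rng = random.Random(seed)
    history = []  # (architecture, estimated performance) pairs seen so far
    for _ in range(num_iterations):
        arch = random_search_strategy(history, rng)
        score = estimate_performance(arch)
        history.append((arch, score))
        if score >= target:
            break
    # Return the best (architecture, score) pair observed.
    return max(history, key=lambda pair: pair[1])


best_arch, best_score = run_nas(num_iterations=50, target=0.99)
print(best_arch, best_score)
```

A smarter search strategy would differ only in how it uses `history` to choose the next candidate; the surrounding loop would stay the same.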

Second Section: Search Space

The space of chain-structured neural networks is a relatively simple search space. It is parameterized by (Ⅰ) the (maximum) number of layers n (possibly unbounded); (Ⅱ) the type of operation each layer executes; and (Ⅲ) the hyperparameters associated with that operation.

The space of multi-branch architectures is a more complex search space. Rather than searching over whole multi-branch architectures, we can search for repeated motifs, dubbed cells or blocks. Zoph et al. (2018) optimize two different kinds of cells: a normal cell that preserves the dimensionality of the input and a reduction cell that reduces the spatial dimension. The final architecture is then built by stacking these cells in a predefined manner. This cell-based search space has three major advantages over searching whole architectures:

- The size of the search space is drastically reduced, since cells usually consist of significantly fewer layers than whole architectures.

- Architectures built from cells can more easily be transferred or adapted to other data sets by simply varying the number of cells and filters used within a model.

- Creating architectures by repeating building blocks has proven a useful design principle in general.

However, a new design choice arises when using a cell-based search space, namely how to choose the macro-architecture: how many cells should be used, and how should they be connected to build the actual model? One direction for optimizing macro-architectures is the hierarchical search space.

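In short, the chain-structured space is a relatively simple search space defined by three sets of parameters: (Ⅰ) the (maximum) number of layers n (possibly unbounded); (Ⅱ) the type of operation each layer executes; and (Ⅲ) the hyperparameters associated with that operation.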
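
To make the three parameters concrete, here is a minimal sketch of a chain-structured search space. The operation set, the hyperparameter choices, and the names `Layer`, `OPERATIONS`, `sample_chain`, and `count_architectures` are assumptions made for illustration; the survey does not prescribe them.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    op: str       # (II) type of operation the layer executes
    params: dict  # (III) hyperparameters associated with that operation


# Hypothetical operation set with hypothetical hyperparameter choices.
OPERATIONS = {
    "conv": {"filters": [16, 32, 64], "kernel_size": [1, 3, 5]},
    "pool": {"kind": ["max", "avg"], "size": [2, 3]},
    "dense": {"units": [64, 128, 256]},
}


def sample_chain(max_layers, rng):
    """Sample one chain-structured architecture uniformly at random."""
    n = rng.randint(1, max_layers)  # (I) number of layers, at most max_layers
    layers = []
    for _ in range(n):
        op = rng.choice(sorted(OPERATIONS))
        params = {name: rng.choice(choices)
                  for name, choices in OPERATIONS[op].items()}
        layers.append(Layer(op, params))
    return layers


def count_architectures(max_layers):
    """Number of distinct chains with 1..max_layers layers."""
    per_layer = 0
    for choices in OPERATIONS.values():
        configs = 1
        for values in choices.values():
            configs *= len(values)
        per_layer += configs  # configurations available for a single layer
    return sum(per_layer ** n for n in range(1, max_layers + 1))


chain = sample_chain(max_layers=10, rng=random.Random(0))
print(len(chain), count_architectures(10))
```

Even with this tiny operation set there are 16 configurations per layer, so the count grows exponentially with the maximum depth, which is why exhaustive enumeration is not practical.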

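The multi-branch space is more complex. For such architectures we can search for cells or blocks instead of whole architectures. Zoph et al. (2018) optimize two kinds of cells: a normal cell that preserves the input dimensionality and a reduction cell that reduces the spatial dimension. The final architecture is built by stacking these cells in a predefined manner. Compared with searching whole architectures, the cell-based search space has three major advantages:

1. The search space is much smaller, because a cell usually has far fewer layers than a whole architecture.

2. Architectures built from cells can be transferred or adapted to other data sets more easily, simply by changing the number of cells and filters used in the model.

3. Building architectures by repeating building blocks has proven to be a useful design principle.

However, a cell-based search space introduces a new design choice, namely how to choose the macro-architecture: how many cells should be used, and how should they be connected to build the actual model? One direction for optimizing the macro-architecture is to use a hierarchical search space.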
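
The macro-architecture question can be illustrated with a small sketch that stacks normal and reduction cells in a fixed pattern. The stacking rule (a run of normal cells per stage, one reduction cell between stages), the halving of the spatial size, the doubling of the filter count, and the function name `build_macro_architecture` are assumptions for illustration, not the exact scheme of Zoph et al. (2018).

```python
def build_macro_architecture(num_stages, normal_cells_per_stage,
                             input_size, initial_filters):
    """Return a list of (cell_type, spatial_size, filters) tuples.

    Assumed stacking rule: each stage is `normal_cells_per_stage` normal
    cells, and consecutive stages are separated by one reduction cell.
    """
    size, filters = input_size, initial_filters
    stack = []
    for stage in range(num_stages):
        for _ in range(normal_cells_per_stage):
            # Normal cell: dimensionality of the input is preserved.
            stack.append(("normal", size, filters))
        if stage < num_stages - 1:
            size //= 2    # reduction cell reduces the spatial dimension
            filters *= 2  # assumption: filter count doubles at each reduction
            stack.append(("reduction", size, filters))
    return stack


# The same two cells reused at two different model sizes.
small = build_macro_architecture(3, 2, input_size=32, initial_filters=16)
large = build_macro_architecture(3, 6, input_size=32, initial_filters=32)
print(len(small), len(large))
```

Only the number of cells and filters changes between `small` and `large`, which mirrors the transferability advantage listed above: the searched cells stay fixed while the macro-architecture is rescaled.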

Third Section: Search Strategy

Many different search strategies can be used to explore the space of neural architectures, including random search, Bayesian optimization, evolutionary methods, reinforcement learning (RL), and gradient-based methods.

Real et al. (2019) conduct a case study comparing RL, evolution, and random search (RS), concluding that RL and evolution perform equally well in terms of final test accuracy, with evolution having better anytime performance and finding smaller models. Both approaches consistently perform better than RS in their experiments, but with a rather small margin: RS achieved test errors of approximately 4% on CIFAR-10, while RL and evolution reached approximately 3.5%.

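In short, many search strategies can be used to explore the space of neural architectures, including random search, Bayesian optimization, evolutionary methods, reinforcement learning (RL), and gradient-based methods.

Real et al. (2019) compare RL, evolution, and random search (RS) and conclude that RL and evolution do equally well in terms of final test accuracy, while evolution has better anytime performance and finds smaller models. Both methods consistently outperform RS in their experiments.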
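
To show how two of these strategies differ mechanically, the sketch below runs random search and a simple evolutionary loop (tournament selection, one-point mutation, removal of the oldest individual) with the same evaluation budget. Everything here is a toy under stated assumptions: the operation list, the made-up `toy_fitness` objective, and all function names are hypothetical, and the code does not reproduce the experimental setup or the results of Real et al. (2019).

```python
import random
from collections import deque

OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]  # hypothetical operation set
NUM_LAYERS = 8


def toy_fitness(arch):
    """Made-up objective: fraction of layers that are 'conv3x3'."""
    return sum(op == "conv3x3" for op in arch) / len(arch)


def random_arch(rng):
    return [rng.choice(OPS) for _ in range(NUM_LAYERS)]


def random_search(budget, rng):
    """Evaluate `budget` independent samples and keep the best one."""
    return max((random_arch(rng) for _ in range(budget)), key=toy_fitness)


def mutate(arch, rng):
    """Replace the operation at one randomly chosen position."""
    child = list(arch)
    child[rng.randrange(len(child))] = rng.choice(OPS)
    return child


def evolution(budget, rng, population_size=20, sample_size=5):
    """Tournament selection plus mutation, discarding the oldest individual."""
    population = deque(random_arch(rng) for _ in range(population_size))
    best = max(population, key=toy_fitness)
    for _ in range(budget - population_size):
        parent = max(rng.sample(list(population), sample_size), key=toy_fitness)
        child = mutate(parent, rng)
        population.append(child)
        population.popleft()  # discard the oldest individual
        best = max(best, child, key=toy_fitness)
    return best


rng = random.Random(0)
rs_best = random_search(200, rng)
evo_best = evolution(200, rng)
print(toy_fitness(rs_best), toy_fitness(evo_best))
```

Random search never reuses information from earlier evaluations, whereas the evolutionary loop builds each new candidate from a good previous one. On a real task, each call to `toy_fitness` would be replaced by a performance estimate of the candidate architecture.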

Fourth Section: Performance Estimation Speed-up Strategy

| Speed-up method | How are speed-ups achieved? |
|---|---|
| Lower fidelity estimates | Training time is reduced by training for fewer epochs, on a subset of the data, with downscaled models, or on downscaled data. |
| Learning curve extrapolation | Training time is reduced because performance can be extrapolated after just a few epochs of training. |
| Weight inheritance / network morphisms | Instead of being trained from scratch, models are warm-started by inheriting weights, which reduces the amount of training required. |
| One-shot models / weight sharing | Only the one-shot model needs to be trained; its weights are then shared across different architectures that are just subgraphs of the one-shot model. |
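
As one concrete illustration of the speed-up methods in the table, the sketch below extrapolates a learning curve from its first few epochs. It assumes the validation error follows a power law, error(t) ≈ a · t^(-b), and fits that curve by least squares in log-log space. The power-law form, the synthetic data, and the names `fit_power_law` and `extrapolate` are assumptions for illustration; methods in the literature use richer curve models.

```python
import math


def fit_power_law(epochs, errors):
    """Fit error(t) ~ a * t**(-b) by least squares in log-log space."""
    xs = [math.log(t) for t in epochs]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


def extrapolate(epochs, errors, target_epoch):
    """Predict the error at `target_epoch` from the observed prefix."""
    a, b = fit_power_law(epochs, errors)
    return a * target_epoch ** (-b)


# Synthetic learning curve that follows the assumed power law exactly.
observed_epochs = [1, 2, 3, 4, 5]
observed_errors = [0.5 * t ** -0.3 for t in observed_epochs]

predicted = extrapolate(observed_epochs, observed_errors, target_epoch=100)
print(predicted)
```

In a NAS setting, a candidate whose predicted final error is clearly worse than the current best could be stopped after these few epochs, which is where the savings in training time come from. Real learning curves are noisy, so the prediction would be uncertain in practice.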